
I had the opportunity to give a keynote speech at CFMS (China Flash Market Summit) | MemoryS 2024 on March 20th. The title of my speech was “Micron memory & storage: Advancing the AI revolution,” and I invite you to watch it.

One of the most interesting emerging trends, and certainly a topic of discussion at the show, was how the data center and edge devices, particularly AI PCs (personal computers) and AI smartphones, will cooperate to deliver AI experiences efficiently and thereby conserve resources. This is a notable change in direction from just a few years ago, when the conventional wisdom was that all compute functions would move to the cloud. At that time, the vision was of a world in which every edge device was simply a thin client, with all applications running in the cloud, all data stored in the cloud and all devices always connected.

Perhaps in the distant future this vision will re-emerge, as trends in technology tend to oscillate. But for the foreseeable future, running every workload in the cloud simply isn't feasible. Power consumption, cost, latency and network bandwidth all impose limits, as does the reality of how quickly humanity can build out (and afford to build out) data center and communications infrastructure. These realities have significant implications for the future of memory and storage.

The first important realization is that reducing power consumption in the data center is more important than ever. Power efficiency has always been an important lever for reducing total costs, conserving energy and minimizing the data center's carbon footprint. But it is now an imperative, because power has become the limiting factor in how quickly large cloud service providers and AI infrastructure operators can build out their infrastructure.

What does this mean?

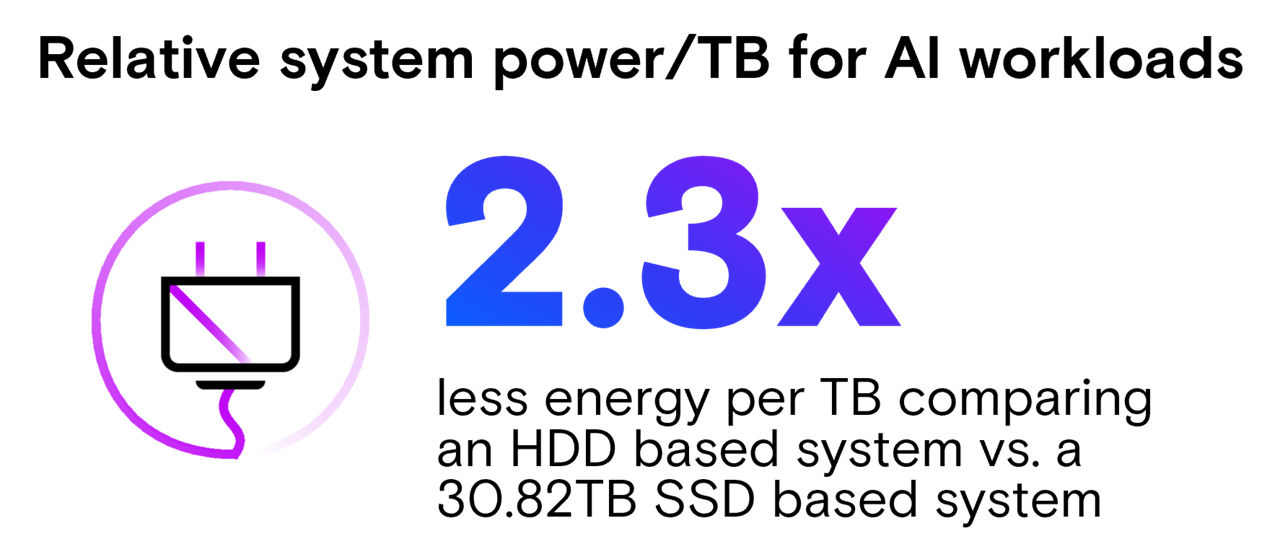

- Using high-capacity SSDs instead of traditional HDDs can shrink the data center footprint by up to fivefold. While SSDs require a higher initial investment, the long-term savings in overhead, cooling, power and space are substantial and support a higher rate of tera operations per second (TOPS). On a power-per-usable-terabyte basis, HDDs must be short-stroked to deliver the performance AI workloads need, so they consume 2.3 times as much power as today's 30.82TB SSDs.¹ This disparity is projected to increase fivefold when comparing HDDs to future higher-capacity SSDs. Investing in SSDs is therefore economically prudent, considering the exorbitant costs of constructing additional data centers and power plants.

- Performance and capacity requirements for local storage (primarily UFS for AI phones and NVMe SSDs for AI PCs) will inevitably increase faster than previously expected. For the past five to ten years, gaming and media production applications (think YouTube, Instagram and TikTok influencers) drove the need for more performance and capacity. But those applications typically represented less than 20% of all users of these devices in mature markets. AI, by contrast, is a ubiquitous application that everyone will use, so it will drive local storage requirements across the entire user base, much as gaming and media production did for a smaller segment. User experience is king in this space, and that's why Micron has focused on perfecting products for the most demanding applications and user experiences.
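The 2.3x power-per-usable-terabyte figure in the first point follows directly from the footnote's per-drive assumptions. The minimal Python sketch below reproduces that arithmetic. It assumes 50W per SSD (1000W chassis power across 20 SSDs) at 30.82TB per SSD, and 30W per HDD short-stroked to 8TB of usable capacity, exactly as stated in the footnote; the helper name `watts_per_tb` is purely illustrative.

```python
"""Sketch: power per usable terabyte, SSD vs. short-stroked HDD.

All figures come from the article's footnote; the helper name is
hypothetical and used only for illustration.
"""


def watts_per_tb(watts_per_drive: float, usable_tb_per_drive: float) -> float:
    """Return per-drive power divided by usable capacity, in W/TB."""
    return watts_per_drive / usable_tb_per_drive


# SSD: 1000W chassis power shared by 20 SSDs = 50W per SSD, 30.82TB each
ssd_w_per_tb = watts_per_tb(1000 / 20, 30.82)

# HDD: 30W per HDD, short-stroked to 8TB of usable capacity
hdd_w_per_tb = watts_per_tb(30, 8)

# How many times as much power the HDD needs per usable terabyte
ratio = hdd_w_per_tb / ssd_w_per_tb

print(f"SSD: {ssd_w_per_tb:.2f} W/TB")
print(f"HDD: {hdd_w_per_tb:.2f} W/TB")
print(f"HDD/SSD power ratio: {ratio:.1f}x")
```

Running it prints 1.62 W/TB for the SSD, 3.75 W/TB for the HDD and a ratio of 2.3x. Substituting a larger SSD capacity shows how the gap widens; the projected fivefold figure depends on future SSD capacities the article does not specify, so none is assumed here.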

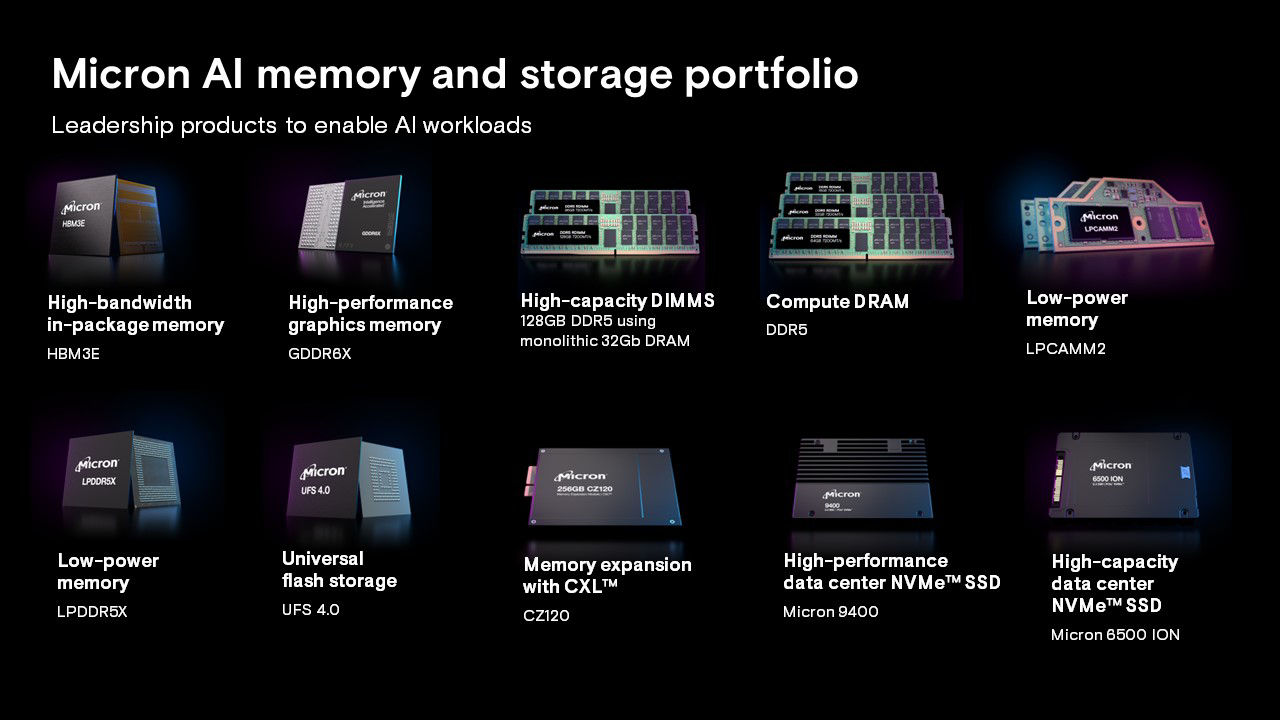

It's an exciting time for storage and innovation! The Age of Intelligence is upon us, and we can shape the future for the benefit of humankind. Memory and storage advances such as Micron’s HBM3E, high-capacity DIMMs, LPDDR5X, LPCAMM, data center SSDs, client SSDs, and UFS will certainly be critical parts of that future.

1. Power-per-terabyte assumptions:
   - SSD system chassis power = 1000W with 20 SSDs/system = 50W/SSD
   - HDD system chassis power = 1200W with 40 HDDs/system = 30W/HDD
   - SSD W/TB = 50W / 30.82TB ≈ 1.62 W/TB; HDD W/TB = 30W / 8TB usable HDD capacity = 3.75 W/TB